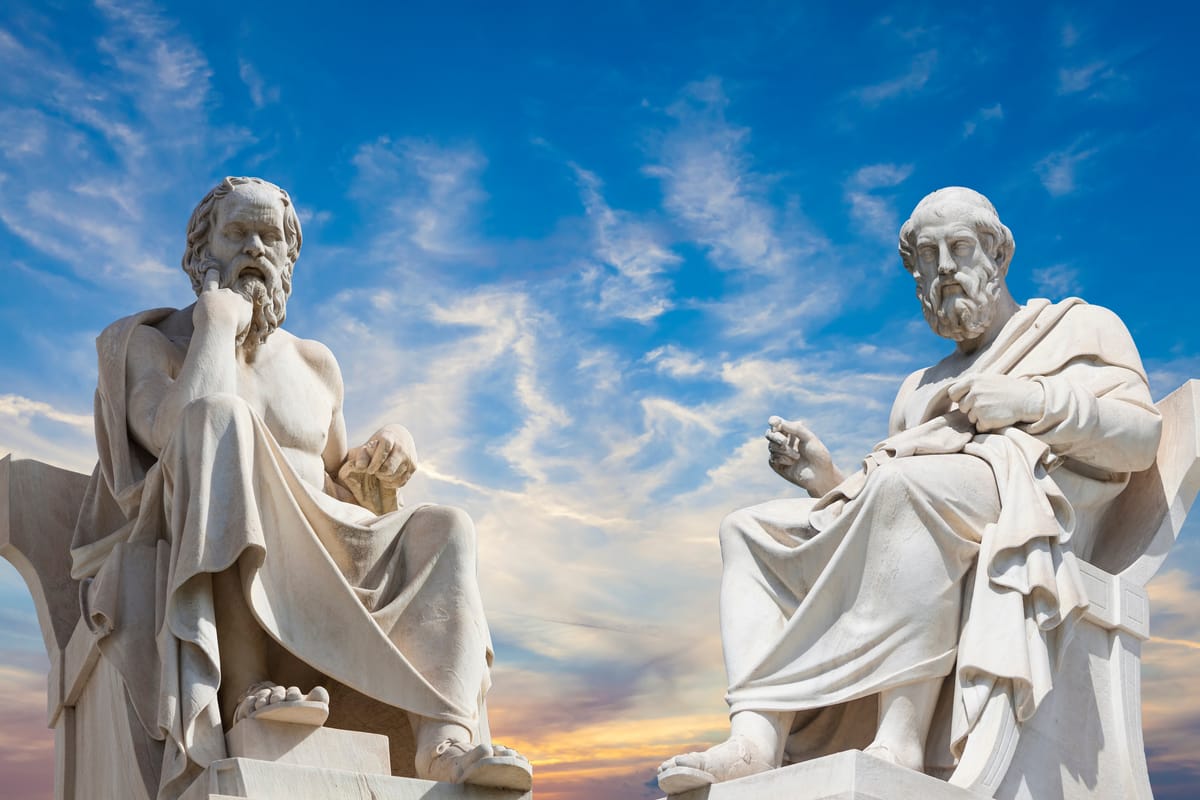

What Would Plato, Socrates, and Seneca Say About AI Today?

AI promises progress, but also casts shadows. From Plato’s cave to Seneca’s discipline, see how ancient wisdom still guides our relationship with technology.

Are We Just Staring at AI Shadows? Ancient Warnings for a Modern Age

“Wonder is the beginning of wisdom.” - Socrates

I’ve always had a passion for the ancient philosophers. Sometimes when I’m out walking my dog, I’ll find myself diving into their dialogues, letting their words transport me into another world, a world of wisdom and sharp-edged questions that still make my head hurt in the best way. I imagine conversations with them: Socrates pressing me with questions I can’t answer, Plato painting metaphors that twist into riddles, Seneca reminding me to breathe and let go of what I can’t control.

So it’s only fitting that I wonder: what would these great minds say if they saw our age of artificial intelligence, with its endless interaction between human and machine?

I’ll admit it: I use AI every day. Not as a crutch, but as a tool. I guide it, I question it, and I treat it like an assistant for time-saving tasks, one that helps me think faster, draft smarter, and grow. But here’s the paradox: the more I use it, the more I realize how easily it could replace the very habits that make growth possible.

That’s where the ancients come in. Artificial intelligence may look like the cutting edge of progress, but the warnings it raises are as old as philosophy itself.

Plato once warned us about mistaking shadows for truth. Socrates argued that knowledge without examination was empty. Seneca urged calm in the face of forces beyond our control.

What the Ancients Really Valued

If I could walk into the Agora of Athens with an iPhone in hand, I don’t think Plato, Socrates, or Seneca would marvel at the device. They’d look past the glowing screen and ask: “What is this doing to your mind?”

For the Greeks and Romans, wisdom wasn’t about speed or scale: not the fastest answer, not the biggest library. It was about the slow, deliberate growth of the soul through questioning, reflection, and virtue.

Plato even worried that writing itself would weaken memory, creating minds that looked full but were hollow. Imagine his reaction to ChatGPT finishing your thoughts before you’ve even finished typing them.

Their suspicion wasn’t about tools themselves but about what tools quietly do to us. And that’s the lens we should use with AI today.

Plato: Shadows on the Wall

Plato’s Allegory of the Cave warned of people staring at shadows and mistaking them for reality. Swap shadows for AI outputs, and the metaphor is painfully relevant.

The danger is not that AI is wrong; it’s that it’s too convincing. When we confuse simulation with truth, we weaken our capacity to think, to question, and eventually to learn.

I saw this firsthand in a boardroom not long ago. An outside consultant swept in, pitching AI not just as the sun but as the whole solar system: every planet and every orbiting moon, all neatly revolving around the promise that AI could solve all the company’s problems. It could create this, analyze that, and generate answers to everything.

Being a long-time consultant myself, I had to step in before he left our galaxy entirely. “Hold on,” I said, “let’s not get ahead of ourselves. Yes, AI is powerful in certain tasks, but keep in mind, it’s an unstoppable algorithm of pleasing, mixed with hallucinations.”

The room went quiet. I tossed in “hallucinations” deliberately, suspecting this self-proclaimed expert had no idea what it meant in the AI context. I was right. He froze, and the executives around the table looked equally blank. That was the moment I realized just how dangerous blind adoption can be, not because AI is inherently evil, but because even the so-called experts can misunderstand it, and yet still sell it with absolute confidence.

Consider March 2023, when a deepfake image of Pope Francis wearing a puffer jacket went viral. Crafted with AI, the image fooled many people into believing it was real. That moment wasn’t just amusing; it was a powerful warning, or perhaps a kind of foreshadowing. It was Plato’s Cave in 4K, with shadows on the wall so sharp and convincing that even the wise could mistake them for truth.

Plato’s Safeguard

And that’s what unsettled me most: if even the sharpest among us can be fooled, where are we heading? Plato’s lesson is clear. We must demand more than flickering shadows on the wall. We must demand to see the foundations: what data trains these systems, how their reasoning is constrained, what safeguards guide their outputs, and where the boundaries of their use must be drawn.

Safeguards aren’t just technical fixes; they are philosophical guardrails. Plato would argue that knowledge without examination is no knowledge at all, and AI without scrutiny is no different. Transparency about training data tells us whose voices shape the machine’s “truth.” Clear reasoning paths help us separate genuine insight from polished illusion. Boundaries of use prevent tools designed for assistance from sliding quietly into manipulation or control.

We’ve already seen what happens without them. Deepfakes erode trust in images. Recommendation engines amplify outrage because it’s profitable. Generative models can produce convincing lies faster than we can fact-check them. These are the shadows Plato warned about: alluring but dangerous, comforting but deceptive.

So, the safeguard is not simply technical; it’s moral.

I can almost hear Plato leaning in:

“Tell me,” he would ask, “is this machine bringing you closer to truth, or merely casting brighter shadows upon the wall? For intelligence that dazzles but does not enlighten is no wisdom at all. Do you embrace the shadows, or do you walk toward the light?”

Socrates: The End of Questioning?

Socrates thrived on questions. He believed the unexamined life was not worth living, and that the struggle of inquiry was the path to true understanding. But AI tempts us to outsource that very struggle. Why wrestle with a problem when a chatbot can hand you a neat, packaged response?

The danger is not that AI makes us dumber, but that it makes us stop the inquiry that makes us wise. When companies release new models, we often debate which is better: faster, smoother, more accurate. But this is surface chatter. The deeper, more uncomfortable Socratic questions are: “What is the true purpose of these systems? Whose interests do they really serve? What values are baked into the data that train them, and what harms could they amplify if scaled?”

Socrates wouldn't just ask these questions; he would use the AI's own outputs to reveal our contradictions. He’d engage in his method of elenchus: “You say you value originality, yet you use a tool that remixes the past. Explain this.” Or “You claim this tool liberates your time, yet you fill that time with more digital consumption. What is the true goal of this freed time?” His goal wouldn’t be to get an answer from the machine, but to show us that we haven’t thought deeply enough about our own values.

And this forces us to confront the elephant in the server room, which isn’t just humming; it’s roaring. Its name is not just profit, but power. The motives are a complex mix of revenue, strategic dominance, and influence. Companies like OpenAI and Meta cloak their ambitions in mission statements about “benefiting humanity,” yet their actions often directly contradict these professed virtues: refusing to disclose training data, amplifying outrage for engagement, and rushing out products to beat rivals. Socrates would be fascinated by this gap between stated virtue and actual action. He’d sit across from these executives, not to be dazzled by demos, but to probe this exact contradiction: “You call this tool wise, yet you built it in secrecy. How can a thing that hides its origins lead us to truth?”

Socrates’s Safeguard

His gift was forcing people to face the inconsistencies in their own reasoning. Our safeguard must be the same: to relentlessly question both the tool and the motives of its creators. We must not accept mission statements at face value. We must demand that their virtues align with their actions. The goal is to keep the gadfly alive, to never outsource the discomfort of true inquiry.

His voice echoes, carrying that familiar, probing irony:

“So, you call it wisdom when a machine answers for you? Then tell me, if you never understand the question yourself, what exactly have you learned?”

Seneca: Calm in the Machine Age

Seneca the Younger (Lucius Annaeus Seneca), the Stoic philosopher, writing in a Rome buzzing with ambition, wealth, and distraction, urged a focus on what lies within our control. His advice is a stark antidote to digital panic: “We suffer more often in imagination than in reality.” Brought into our age, the lesson is simple: why lose your peace over what a machine can or cannot do? You command your mind, not its algorithms.

If Seneca observed our AI age, I doubt he’d panic about robot uprisings. He’d identify a more insidious threat: our voluntary surrender of judgment. The greater danger isn’t AI becoming a god, but AI turning us into passive consumers who outsource our reason, creativity, and attention to glowing screens.

This is the modern “attention economy,” and it is the ultimate Stoic battleground. Tech companies are engineering systems to seize the one thing the Stoics said was truly ours: our conscious attention and judgment. When you’re doomscrolling, you don’t notice your agency slipping away, because the machine is choosing for you. Social media’s endless scroll doesn’t force you to watch; it nudges, hypnotizes, and consumes time, your most finite resource. This is precisely the kind of quiet surrender Seneca warned against in On the Shortness of Life, the tragedy of squandering our lives on distractions chosen for us by others.

He’d also warn against outsourcing our virtues. Leaning on AI for painting, songwriting, or comedy might be clever, but if we abdicate creativity itself, we dull the spark that allows us to practice expression, perseverance, and originality, core aspects of a virtuous life.

Seneca’s Safeguard

Seneca would argue the safeguard isn’t to fight the tool, but to master ourselves in its use. He would likely see technology the way he saw wealth or power: neutral in itself, but corrupting if we hand over our minds to it. Our freedom lies in disciplined use, not in blind delegation. Use AI, but never follow its outputs as if they were fate.

But he would also challenge the feeling of powerlessness. We may not be in the labs of Big Tech, but we are not without agency. Ordinary Romans couldn’t control the emperor’s decrees, but they could choose how to live with integrity under them. Our duty is the same: to exercise restraint ourselves, to guard our attention, and to demand ethical boundaries. If the tech giants push without virtue, then we must champion it all the more fiercely, keeping our reason and humanity intact. History shows that empires built on hubris eventually falter. The empire of technology will be no different.

His voice carries like a reminder across centuries:

“You cannot hand your mind to a machine and still call yourself free. They may own the algorithm, but they do not own your attention. Guard it, for it is the essence of your life.”

Closing Thought

AI should not be ignored, nor abolished. It should be embraced as a partner, a tool to help mankind grow. But never an adversary, and never a god.

At the end of the day, we created it. We guide it. We decide what it becomes. Let gods be gods, and humans be humans. Let not humans forge gods of silicon that think for themselves.

The pursuit of an unfettered “singularity” is a dangerous gamble, not an inevitable destiny. Our focus must shift from blind acceleration to unwavering responsibility: ensuring that the tools we create are built with safeguards that support our survival and growth rather than work against them.

Socrates said: “The unexamined life is not worth living.”

The same is true of unexamined technology. If we examine not just what AI can do, but what it is doing to our attention, our creativity, our society, then we will heed the ancients' warning. Plato's shadows on the wall are more convincing than ever, but the path to the light remains the same. It's our responsibility to prevail through questioning, through virtue, and through the relentless protection of our own humanity.

Stay calm, stay human, and don’t let AI steal the discipline of your own mind, your own art.